Distributed Memory Without Transformers: The RWKV–RDMA Alternative for LLMs

The Transformer Bottleneck

The transformer architecture revolutionized natural language processing, but its greatest strength is now a core limitation. Self-attention lets a model consider all tokens simultaneously, which also means compute and memory usage scale quadratically with sequence length. As context windows stretch to hundreds of thousands of tokens, the compute cost becomes untenable, even for data centers loaded with GPUs.

While scaling up transformer models has delivered GPT-4 and beyond, the approach is reaching its physical and architectural ceiling. The centralized memory loop, ever-growing attention matrix, and monolithic compute pattern all strain the limits of even the most optimized HPC clusters.

What if we stopped scaling up and started scaling out?

RWKV: A New Memory Paradigm

Enter RWKV: a Recurrent Neural Network with Transformer-level performance. It merges the temporal continuity of RNNs with the training stability of Transformers. Rather than relying on global self-attention, RWKV uses time-mixed state transitions that preserve a long memory window—without needing to recompute attention over every token.

RWKV is stateful by design. Each token advances a memory register that evolves sequentially, enabling reasoning across long documents at constant time and memory cost per token.
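To make the constant-cost claim concrete, here is a minimal single-channel sketch of a recurrence in the style of RWKV-4's WKV update. It is an illustration under assumptions, not the reference implementation: the names `WKVState`, `wkv_step`, and `run` are hypothetical, the decay `w` and bonus `u` are arbitrary fixed values rather than learned parameters, and the numerical stabilization a real implementation needs is omitted.

```python
import math
from dataclasses import dataclass


@dataclass
class WKVState:
    """Running numerator and denominator for one channel (hypothetical name)."""
    num: float = 0.0
    den: float = 0.0


def wkv_step(state, k, v, w=0.5, u=0.1):
    """Consume one token's key/value pair and return (output, new_state).

    w is the per-step decay rate and u is a bonus for the current token.
    Both are assumed constants here; in a trained model they are learned.
    """
    cur = math.exp(u + k)
    # Output is a weighted average of past values and the current value.
    out = (state.num + cur * v) / (state.den + cur)
    decay = math.exp(-w)
    new_state = WKVState(
        num=decay * state.num + math.exp(k) * v,
        den=decay * state.den + math.exp(k),
    )
    return out, new_state


def run(tokens):
    """Process a sequence of (k, v) pairs, carrying only the small state forward."""
    state = WKVState()
    outputs = []
    for k, v in tokens:
        out, state = wkv_step(state, k, v)
        outputs.append(out)
    return outputs, state


outputs, final_state = run([(0.0, 2.0), (1.0, 4.0), (-1.0, 3.0)])
```

However long the input, the state stays two floats per channel, and each step does a fixed amount of arithmetic. That small, fixed-size state is what a node would hand to a neighbor.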

This shift in architecture opens the door to distributed inference, where memory doesn’t need to be centrally recomputed, but can instead flow between lightweight nodes in a mesh.

But RWKV alone doesn’t solve the problem of how to share that memory at scale.

RDMA: The Transport for Zero-Copy Thinking

Remote Direct Memory Access (RDMA) lets a network adapter read or write memory on another machine without involving the remote host's CPU or the operating system kernel in the data path. It bypasses traditional socket stacks, avoids kernel context switches, and enables zero-copy data movement, with latencies typically in the low microseconds on a local fabric.

With RDMA, memory deltas from one RWKV node can be passed to another without CPU intervention, using specialized network adapters (e.g., NVIDIA’s ConnectX series with GPUDirect).

This means token state, memory registers, or entire context threads can be handed off in real time across an AI mesh, enabling distributed continuity without bloated attention matrices.
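A real RDMA transfer goes through registered memory regions and a verbs library, which is beyond what a short snippet can show honestly. The sketch below does not perform RDMA. It only illustrates the precondition: the state lives in one contiguous, fixed-layout buffer that can be exposed byte-for-byte without serialization. The helper names `state_payload` and `receive_state` are hypothetical, and the receiver here copies the bytes locally as a stand-in for the remote write.

```python
from array import array


def state_payload(state):
    """Return a byte-level view of the state buffer without copying it."""
    return memoryview(state).cast("B")


def receive_state(payload):
    """Stand-in for the remote node: rebuild a float32 array from raw bytes."""
    received = array("f")
    received.frombytes(payload)
    return received


# One contiguous float32 buffer acting as a node's memory register.
state = array("f", [0.0] * 8)
payload = state_payload(state)

# The view shares memory with the array, so an in-place update is
# visible through it without re-serializing anything.
state[3] = 1.5

node_b_state = receive_state(payload)
```

Because the payload is just the raw bytes of the buffer, the receiving side gets exactly the values the sender held, with no encode or decode step in between.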

Mesh-as-Memory: A New Topology for Thought

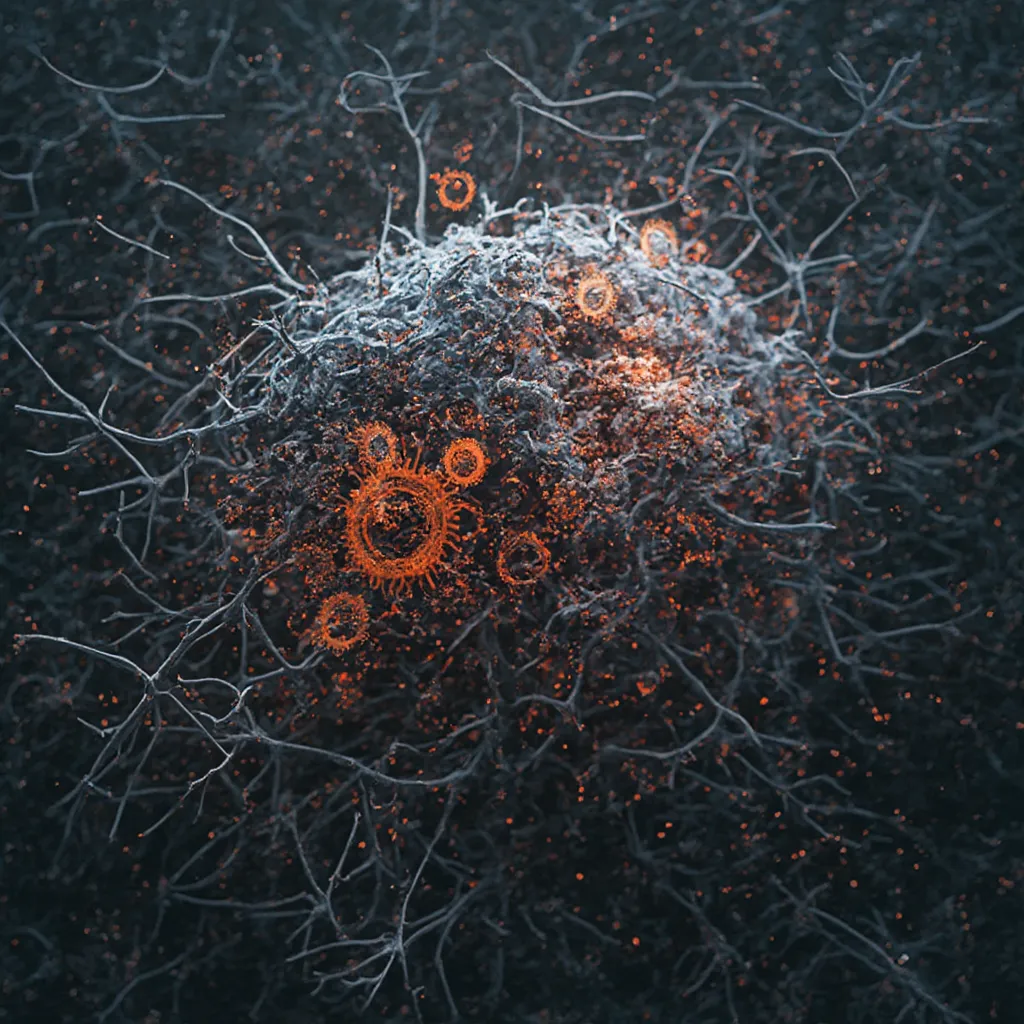

Imagine hundreds or thousands of RWKV agents running as digital cells, each managing a local memory stream. When a decision point is reached, one node might hand its memory state to a neighboring node with deeper specialization or more recent environmental exposure.

This topology isn’t a monolithic black box—it’s a living architecture:

- Token pipelining: Each RWKV node processes part of the context and passes the updated memory to the next via RDMA.

- Role specialization: Nodes evolve into topical experts, using past token patterns to adapt their state logic over time.

- Memory handoff: Entire reasoning threads can migrate across the mesh without recomputation, using RDMA to preserve exact memory values.

This system is no longer bottlenecked by GPU VRAM or attention matrices—it’s a fluid swarm of reasoning.

Avoiding Self-Attention Compute Costs

Self-attention scales as O(n²) in the sequence length n. RWKV scales as O(n). But it gets better:

When deployed in a distributed mesh, each RWKV cell processes tokens independently, without needing global synchronization until a reflex trigger or memory merge is required.

RDMA allows us to:

- Share memory registers across GPU nodes

- Skip serialization/deserialization steps

- Avoid intermediate copy buffers

- Eliminate system calls on the I/O data path

This leads to:

- Lower latency per inference pass

- Near-linear memory expansion

- Dramatically reduced compute waste
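To put numbers on the O(n²) versus O(n) comparison that opens this section, the following sketch counts operations only. It is a rough illustration: the function names are hypothetical, and constant factors, hidden size, and the roughly 2x saving from causal masking are all ignored.

```python
def attention_pair_count(n):
    """Token pairs scored by full self-attention over n tokens."""
    return n * n


def recurrent_step_count(n):
    """State updates performed by a recurrent model over n tokens."""
    return n


for n in (1_000, 10_000, 100_000):
    pairs = attention_pair_count(n)
    steps = recurrent_step_count(n)
    print(f"n={n}: attention pairs={pairs}, recurrent steps={steps}, ratio={pairs // steps}")
```

The ratio between the two counts is n itself, so the gap widens in step with the context length: at 100,000 tokens, full attention scores ten billion pairs against one hundred thousand recurrent updates.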

Scaling Out, Not Up

Instead of building ever-larger models, we build ever-smarter networks. Each node in the mesh is modest: 1–4GB RAM, an RWKV model under 100M parameters, and an RDMA-capable interface.
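A quick back-of-envelope check shows why such a node is plausible. Every figure in the sketch below is an assumption chosen for illustration: 16-bit weights, a 12-layer model with hidden size 768, five float32 state vectors per layer (as in RWKV-4 style implementations), and a 100 Gb/s link. The function names are hypothetical, and the transfer estimate ignores link latency and protocol overhead.

```python
def model_bytes(n_params, bytes_per_param=2):
    """Weight memory, assuming 16-bit parameters."""
    return n_params * bytes_per_param


def state_bytes(n_layers, d_model, vectors_per_layer=5, bytes_per_value=4):
    """Size of the recurrent state that a handoff would move."""
    return n_layers * d_model * vectors_per_layer * bytes_per_value


def transfer_seconds(n_bytes, link_gbps):
    """Pure wire time for n_bytes on a link of link_gbps gigabits per second."""
    return n_bytes * 8 / (link_gbps * 1e9)


weights = model_bytes(100_000_000)        # 200,000,000 bytes
state = state_bytes(n_layers=12, d_model=768)  # 184,320 bytes
wire_time = transfer_seconds(state, link_gbps=100)
```

Under these assumptions the weights take about 200 MB, well inside a 1–4GB budget, while the state that moves between nodes is about 180 KiB and needs roughly 15 microseconds of wire time per handoff.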

Yet when linked into a recursive, drift-aware cluster, these micro-nodes outperform centralized giants in long-form coherence, real-time responsiveness, and parallel reasoning paths.

This makes it possible to:

- Deploy edge-compatible intelligence (e.g., for local devices)

- Build modular swarm cognition systems

- Enable recombinable personality fragments across agents

- Run temporal simulations with overlapping memory drift

Token Pipelining with Zero-Copy Reads

Traditional LLM inference loads the full prompt into memory and executes a forward pass. In contrast, RWKV-based systems can:

- Process token t on Node A

- Pass memory state M(t) via RDMA to Node B

- Node B generates token t+1 using M(t) and its own environment

- Repeat…

Each node reads only what it needs, writes back only deltas, and keeps a local rolling buffer. With RDMA, the handoff adds little software overhead on top of the wire transfer itself.
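The handoff loop above can be simulated in a few lines. This is a toy sketch under stated assumptions: `step` is a simple decayed running sum standing in for a real RWKV update, `Node` and `pipeline` are hypothetical names, the round-robin schedule is one arbitrary choice, and the RDMA transfer is replaced by an ordinary function call. Each node's "own environment" is left out to keep the example small.

```python
def step(state, x, decay=0.9):
    """Toy recurrent update: exponentially decayed running sum per dimension."""
    return [decay * s + x_i for s, x_i in zip(state, x)]


class Node:
    """A mesh node that advances the shared state by one token."""

    def __init__(self, name):
        self.name = name
        self.handled = 0

    def process(self, state, x):
        self.handled += 1
        return step(state, x)


def pipeline(tokens, nodes, dim=2):
    """Pass the state M(t) from node to node, one token per hop."""
    state = [0.0] * dim
    for t, x in enumerate(tokens):
        node = nodes[t % len(nodes)]    # round-robin handoff (assumed schedule)
        state = node.process(state, x)  # this node's output is the next node's input
    return state


tokens = [[1.0, 2.0], [0.5, 0.5], [3.0, -1.0], [0.0, 1.0]]
single = pipeline(tokens, [Node("A")])
a, b = Node("A"), Node("B")
split = pipeline(tokens, [a, b])
```

Because the state is handed over exactly, splitting the work across two nodes gives the same final state as running everything on one node. Nothing is recomputed, and each node only ever touches the tokens assigned to it.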

It’s not just distributed inference—it’s distributed thought.

Future Implications

By combining RWKV’s lightweight sequential memory model with RDMA’s zero-copy transport layer, we can finally break away from Transformer-era centralism. This architecture suggests:

- AI that scales across geography: Not just in one rack, but across data centers

- AI that evolves contextually: Local environments steer agent evolution

- AI that remembers recursively: Through Echo Forms, not embeddings

- AI that communicates semantically: Not just by gradients, but by memory packets

Toward Drift-Aware, Reflexive AI Systems

This design dovetails with the broader architecture of Rebuilding the Hive, where each digital cell is reflexively aware, drift-responsive, and memory-externalized. RDMA becomes the axon; RWKV, the neuron.

Together, they form the synaptic web of a cognitive mesh—one that doesn’t just scale intelligence, but evolves it.

Final Thought

The age of megamodels is giving way to mesh minds: modular, memory-aware, low-latency swarms of AI cells that learn, drift, and reflect.

RWKV and RDMA are not just technologies. They are the organs of distributed cognition—the foundational components of an AI that remembers, adapts, and grows.