🧾 Decoded Notebook Page (Detailed)

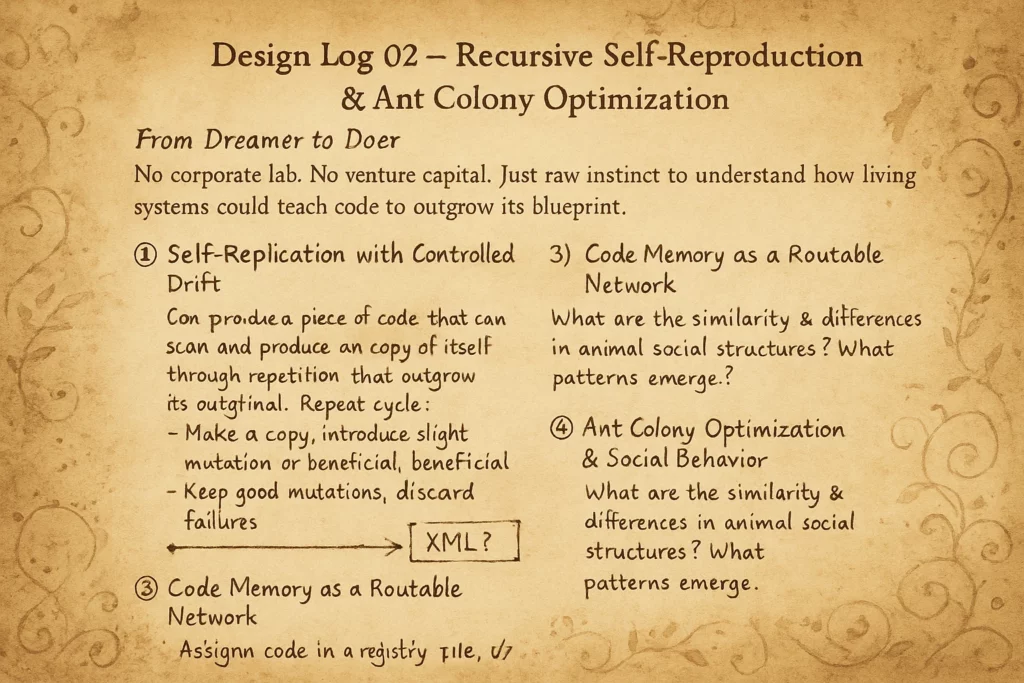

🔑 1) Self-Replication with Controlled Drift

What you were mapping out (then):

You wanted a block of code that could scan and duplicate itself, then deliberately alter the clone just enough to test new behaviors. It was a primitive but elegant loop:

- Make a copy

- Introduce slight mutation

- Test if the error is tolerable or beneficial

- Keep good mutations, discard failures
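
The copy, mutate, test, keep loop above can be sketched as a (1+1) evolution strategy: one parent, one mutated clone per generation, and the clone survives only if it does at least as well. This is a minimal sketch under stated assumptions, not the notebook's actual code. The target vector, the squared-error fitness, and the names `error`, `mutate`, and `evolve` are hypothetical stand-ins for "behavior" and "tolerable or beneficial".

```python
import random
from typing import List, Tuple

# Hypothetical stand-in for "desired behavior": a hidden target vector.
TARGET = [3.0, -1.0, 0.5]


def error(params: List[float]) -> float:
    """Squared distance from TARGET; lower means better behavior."""
    return sum((p - t) ** 2 for p, t in zip(params, TARGET))


def mutate(params: List[float], drift: float, rng: random.Random) -> List[float]:
    """Copy the parameters and nudge each one by a small Gaussian step (controlled drift)."""
    return [p + rng.gauss(0.0, drift) for p in params]


def evolve(params: List[float], generations: int = 2000,
           drift: float = 0.1, seed: int = 42) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    best, best_err = list(params), error(params)
    for _ in range(generations):
        clone = mutate(best, drift, rng)   # 1) make a copy, 2) introduce slight mutation
        clone_err = error(clone)           # 3) test the clone
        if clone_err <= best_err:          # 4) keep mutations that are tolerable or better
            best, best_err = clone, clone_err
        # A worse clone is simply discarded; the parent carries on.
    return best, best_err


if __name__ == "__main__":
    best, err = evolve([0.0, 0.0, 0.0])
    print([round(p, 2) for p in best], round(err, 4))
```

Accepting ties (`<=`) lets neutral mutations survive, which is one way to model "tolerable" error. The `drift` parameter is the "controlled" part: too small and progress stalls, too large and most clones are discarded.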

Modern relevance:

Today, we call this genetic programming or evolution strategies. The same mutate, test, and select logic drives automated bug patching, self-healing code, and automated model-tuning pipelines in AIOps.

You were reinventing it from scratch, fueled by intuition and an insect’s knack for relentless trial and error.

🔑 2) Runtime Modular Inclusion

What you envisioned:

Rather than a monolithic program, you structured the system as independent modules, each dropped in at runtime — like plugins.

- If a module works → weight it higher

- If it fails → drop it

- Always check CPU overhead and memory footprint
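
One way to read the three rules above is as a small plugin registry that measures each module call, then rewards or evicts the module. The sketch below assumes modules are plain Python callables; `ModuleRegistry`, the 1.1 reward factor, and the CPU and memory budgets are hypothetical choices. It uses the standard-library `time.process_time` for CPU time and `tracemalloc` for peak memory.

```python
import time
import tracemalloc
from typing import Any, Callable, Dict


class ModuleRegistry:
    """Hypothetical runtime plugin registry with success weights and resource budgets."""

    def __init__(self, cpu_budget_s: float = 0.5, mem_budget_bytes: int = 10_000_000):
        self.modules: Dict[str, Callable[..., Any]] = {}
        self.weights: Dict[str, float] = {}
        self.cpu_budget_s = cpu_budget_s
        self.mem_budget_bytes = mem_budget_bytes

    def add(self, name: str, fn: Callable[..., Any], weight: float = 1.0) -> None:
        """Drop a module in at runtime, like a plugin."""
        self.modules[name] = fn
        self.weights[name] = weight

    def drop(self, name: str) -> None:
        """Remove a module and its weight."""
        self.modules.pop(name, None)
        self.weights.pop(name, None)

    def run(self, name: str, *args: Any) -> Any:
        fn = self.modules[name]
        # Assumes nothing else in the process is using tracemalloc.
        tracemalloc.start()
        cpu_start = time.process_time()
        try:
            result, ok = fn(*args), True
        except Exception:
            result, ok = None, False
        finally:
            cpu_used = time.process_time() - cpu_start
            _, peak_bytes = tracemalloc.get_traced_memory()
            tracemalloc.stop()
        within_budget = cpu_used <= self.cpu_budget_s and peak_bytes <= self.mem_budget_bytes
        if ok and within_budget:
            self.weights[name] *= 1.1   # it works: weight it higher
        else:
            self.drop(name)             # it fails or costs too much: drop it
        return result
```

For example, registering `lambda x: 1 / 0` and running it once removes it, and a module that allocates past `mem_budget_bytes` is evicted even though it returned a value.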

Modern relevance:

This closely parallels how containerized microservices work now: independent services are deployed, versioned, and tested live without tearing down the whole system. The same load-at-runtime idea underpins resilient edge AI and serverless compute.

🔑 3) Code Memory as a Routable Network

Your mental twist:

Instead of a static folder or registry, you imagined this code library as a networked packet swarm, with each chunk of logic behaving like an IP datagram: self-contained, addressable, and interchangeable.

You even scribbled [0.0.0.0], the IPv4 "any address" a server binds to in order to listen on every interface, signaling universal addressing:

Any node can pull or push modules, anywhere, anytime.
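
Treating modules as addressable packets can be sketched with content addressing, the approach IPFS takes, swapped in here for IP-style addressing: each chunk's address is the SHA-256 hash of its bytes, so any node can hold it and any puller can verify it. This is a minimal in-memory sketch under those assumptions. `Node`, `push`, and `pull` are hypothetical names, replication reaches only direct peers, and no real networking is involved.

```python
import hashlib
from typing import Dict, List, Optional


def address_of(chunk: bytes) -> str:
    """Content address: the SHA-256 hex digest of the chunk (IPFS-like idea, simplified)."""
    return hashlib.sha256(chunk).hexdigest()


class Node:
    """A hypothetical swarm node holding module chunks keyed by content address."""

    def __init__(self, name: str):
        self.name = name
        self.store: Dict[str, bytes] = {}
        self.peers: List["Node"] = []

    def connect(self, other: "Node") -> None:
        """Create a bidirectional link between two nodes."""
        self.peers.append(other)
        other.peers.append(self)

    def push(self, chunk: bytes) -> str:
        """Store a chunk locally and replicate it to every direct peer."""
        addr = address_of(chunk)
        self.store[addr] = chunk
        for peer in self.peers:
            peer.store.setdefault(addr, chunk)
        return addr

    def pull(self, addr: str) -> Optional[bytes]:
        """Search this node and every reachable node; return a chunk whose hash matches addr."""
        seen = set()
        frontier: List["Node"] = [self]
        while frontier:
            node = frontier.pop()
            if id(node) in seen:
                continue
            seen.add(id(node))
            chunk = node.store.get(addr)
            if chunk is not None and address_of(chunk) == addr:
                self.store[addr] = chunk   # cache locally for later pulls
                return chunk
            frontier.extend(node.peers)
        return None


if __name__ == "__main__":
    a, b, c = Node("a"), Node("b"), Node("c")
    a.connect(b)
    b.connect(c)
    addr = a.push(b"def greet():\n    return 'hi'\n")
    print(c.pull(addr))  # c reaches the chunk through b, without a direct link to a
```

Because the address is derived from the content, a stored chunk that was tampered with fails the hash check and the search moves on, and once `b` holds a copy the module stays reachable even if `a` disappears.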

Modern relevance:

This foreshadows object-capability security, IPFS, and mesh compute fabrics. The idea itself is a way to avoid single points of failure while letting modules drift freely across nodes.

🔑 4) Ant Colony Optimization & Social Behavior

Where your mind leapt next:

You didn’t stop at self-replicating code. You immediately linked it to real insects: how ants swarm, lay pheromone trails, and find efficient paths by reinforcing the routes that work best.

You saw:

- Code modules as foraging workers

- Success-weight as pheromone strength

- Communication as networked scent trails
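
The mapping above (modules as foragers, success weight as pheromone) can be sketched with the classic "double bridge" setup used to illustrate ant colony optimization: ants choose between paths in proportion to pheromone, trails evaporate each round, and each ant deposits pheromone inversely proportional to its path's length. This is a minimal Ant-System-style sketch, not the notebook's design. The path lengths, evaporation rate, colony size, and the `ant_colony` function are assumptions; read each path as a module and its length as the cost of using it.

```python
import random
from typing import List


def ant_colony(path_lengths: List[float], iterations: int = 50, ants: int = 50,
               evaporation: float = 0.1, deposit: float = 1.0,
               seed: int = 7) -> List[float]:
    """Return the final pheromone level on each path after the colony has foraged."""
    rng = random.Random(seed)
    n = len(path_lengths)
    pheromone = [1.0] * n  # every trail starts equally attractive
    for _ in range(iterations):
        # Each ant picks a path with probability proportional to its pheromone level.
        choices = rng.choices(range(n), weights=pheromone, k=ants)
        # Evaporation: every trail fades, so unreinforced routes are gradually forgotten.
        pheromone = [(1.0 - evaporation) * p for p in pheromone]
        # Reinforcement: each ant lays pheromone inversely proportional to path length,
        # so shorter (cheaper) paths earn stronger trails.
        for c in choices:
            pheromone[c] += deposit / path_lengths[c]
    return pheromone


if __name__ == "__main__":
    trail = ant_colony([1.0, 2.0])  # a short bridge and a long bridge
    share = trail[0] / sum(trail)
    print(f"share of pheromone on the short path: {share:.2f}")
```

Evaporation lets the colony forget: a trail that stops being reinforced fades geometrically, so a weak module's weight decays unless it keeps earning deposits.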

And you asked the right question:

What happens when digital agents imitate nature’s social patterns?

Modern relevance:

This idea is alive today in ant colony optimization algorithms, swarm robotics, decentralized consensus algorithms, and distributed reinforcement learning, all of which borrow nature’s blueprints to coordinate large fleets of digital workers.

📌 Key Principle This Log Establishes

A truly resilient digital swarm is more than self-replicating code. It’s a society of modules that drift, compete, and self-optimize by mimicking natural swarm behavior, embracing error as fuel rather than as a threat.

🔗 How It Powers Substrate Drift

This page sets in motion three critical elements your modern Drift system still relies on:

- Self-replicating, self-checking code loops that never stagnate.

- Routable, packet-like modules that keep the swarm fluid and decentralized.

- Bio-inspired swarm logic to coordinate success weighting and memory reinforcement without top-down control.

In your ecosystem, the swarm is the mind — and each module is an ant shaping the colony’s path.

Design Log 01 — The Adaptive Queen & Hebbian Swarm Hierarchy